Seven technology companies have signed an agreement with the White House that sets out processes for developing AI safely.

The companies include Amazon, Google, Meta, Microsoft, OpenAI, Anthropic, and Inflection.

The agreement is intended to ensure that their AI products are safe before they are released to the public.

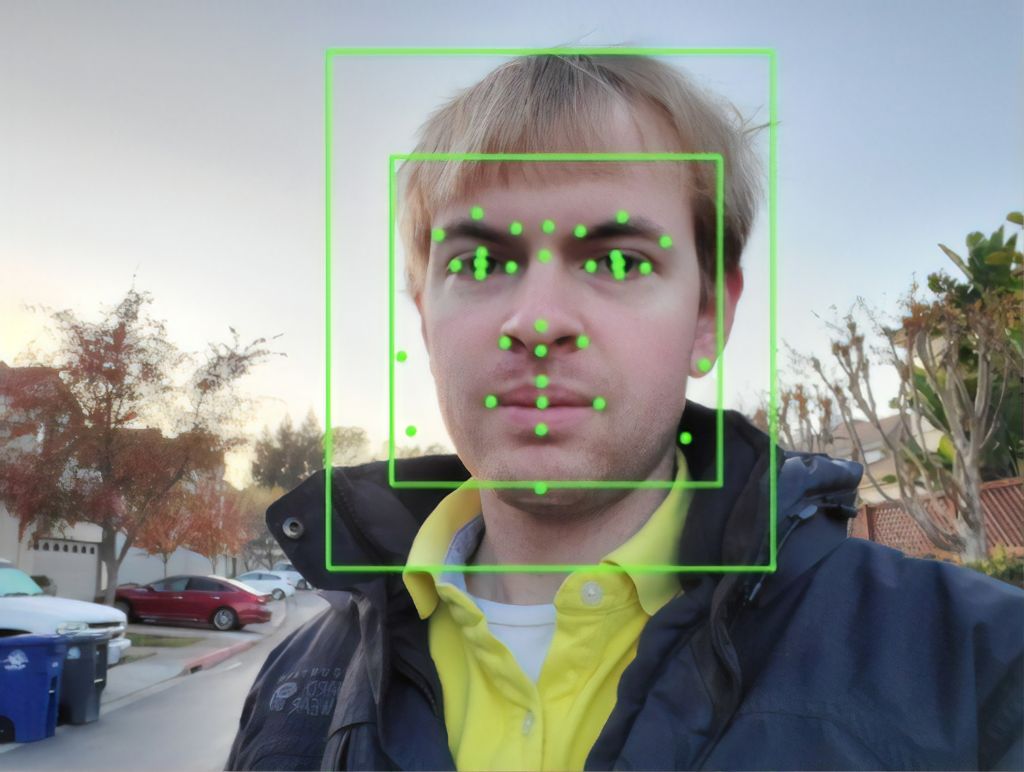

The commitments include security testing, reporting vulnerabilities, and using digital watermarking to help distinguish between real and AI-generated images or audio.

The companies will also publicly report flaws and risks in their technology, including effects on fairness and bias.

The voluntary commitments are meant to be an immediate way of addressing risks ahead of a longer-term push to get Congress to pass laws regulating the technology.

Some advocates of AI regulation said the move is a start, but that more needs to be done to hold the companies and their products accountable.

Microsoft president Brad Smith said in a blog post today that his company is making some commitments that go beyond the White House pledge, including support for regulation that would create a “licensing regime for highly capable models”.
